Google Research has been working on a new project that would let people with Android devices collaborate on big screens in real time, without cumbersome file sharing. Cloud storage makes sharing somewhat easier than it used to be, but Google feels that "there are not enough ways to easily move tasks across devices that are as intuitive as drag-and-drop in a graphical user interface."

The project, called Open Project, is a framework that lets native mobile apps be projected onto a larger screen. It serves a similar purpose to Apple's AirPlay, but it works in a very different way.

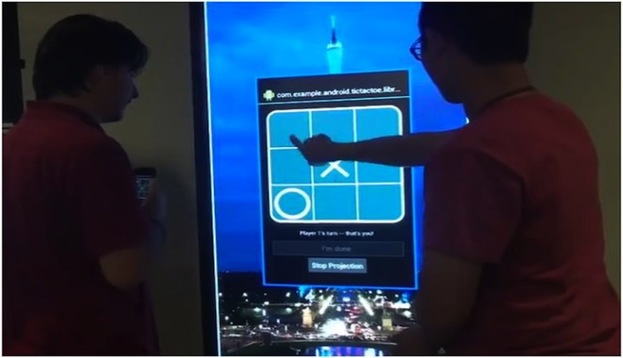

Open Project uses a QR code to start the projection: the user simply points their phone, with the app they want to share, at the code. Once the code is scanned, the app appears on the big screen, and users can keep interacting with it just as they would on a touch device. Google's demonstration video shows people collaborating and even playing a game on a big screen using only their phones, with no additional hardware or complicated setup.

Google isn't sure when this technology will become a reality, but judging by how well it works in the demo, it may not be far off.

Source: TechCrunch